In computer graphics, a texture is image data that is wrapped around a 3D model. For example, the image below shows a model with a texture applied.

In this article, I will teach you how to apply a texture to a 3D model.

Before I start, I recommend that you read the prerequisite materials listed below:

Prerequisite:

How does a GPU apply a Texture?

As you may recall, a rendering pipeline consists of a Vertex Shader and a Fragment Shader. The Fragment Shader is responsible for attaching a texture to a 3D model.

To attach a texture to a model, you need to send the following information to the Fragment Shader:

- UV Coordinates

- Raw image data

- Sampler information

The UV coordinates are sent to the GPU as vertex attributes. Fragment Shaders cannot receive attributes directly, so the UV coordinates are sent to the Vertex Shader, which passes them down to the Fragment Shader.
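Between the two shaders, the rasterizer interpolates the per-vertex UV coordinates across each triangle, so every fragment receives its own UV coordinate. The C sketch below illustrates that step with barycentric weights. It is a simplification: the names "UV" and "interpolate_uv" are hypothetical, and it performs plain linear interpolation, whereas Metal interpolates [[stage_in]] values with perspective correction by default.

```c
/* A 2D texture coordinate (hypothetical helper type). */
typedef struct {
    float u, v;
} UV;

/* Blend the three per-vertex UVs of a triangle using barycentric weights
   w0, w1, w2 (expected to sum to 1). A fragment sitting exactly on vertex b
   uses weights (0, 1, 0) and gets b's UV; fragments inside the triangle get
   a weighted mix of all three. Plain linear interpolation, no perspective
   correction. */
UV interpolate_uv(UV a, UV b, UV c, float w0, float w1, float w2) {
    UV out;
    out.u = w0 * a.u + w1 * b.u + w2 * c.u;
    out.v = w0 * a.v + w1 * b.v + w2 * c.v;
    return out;
}
```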

The raw image data contains pixel information, such as each pixel's RGBA color values. It is packed into a Texture Object and sent directly to the Fragment Shader. The Texture Object also holds image properties such as the width, height, and pixel format.

Often, a texture does not fit a 3D model exactly. In these instances, the GPU must stretch or shrink the texture. A Sampler Object contains two sets of parameters, Filtering and Addressing, which tell the GPU how to stretch, shrink, or wrap a texture. The Sampler Object is also sent directly to the Fragment Shader.

Once this information is available, the Fragment Shader samples the supplied raw data and returns a color depending on the UV-coordinates and the Sampler information. The color is applied fragment by fragment onto the model.

UV coordinates

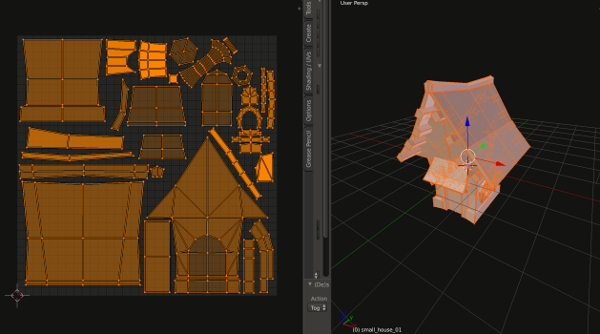

One of the first steps in adding a texture to a model is to create a UV map. A UV map is created when you unwrap a 3D model into its 2D equivalent. The image below shows a model being unwrapped into 2D.

Unwrapping the 3D character into a 2D representation allows a texture to be applied to it correctly. The image below shows a texture and the 3D model with the texture applied.

During the unwrapping process, the vertices of the 3D model are mapped into a two-dimensional coordinate system. This new coordinate system is known as the UV Coordinate System.

The UV Coordinate System is composed of two axes, known as the U and V axes. These axes are equivalent to the familiar X-Y axes; the only difference is that the U and V axes range from 0 to 1. The U and V components are also referred to as the S and T components.

The new vertices produced by unwrapping the model are called UV Coordinates. These coordinates are loaded into the GPU and serve as reference points as it attaches the texture to the model.

Luckily, you do not need to compute the UV coordinates yourself. Modeling tools such as Blender supply them.

Decompressing Image Data

For the Fragment Shader to apply a texture, it needs the RGBA color information of an image. To get this raw data, you have to decode the image. LodePNG, created by Lode Vandevenne, is an excellent library for decoding PNG images, and we will use it to decode our PNG image.

Texture Filtering & Wrapping (Addressing)

Textures don't always align with a 3D model. Sometimes a texture needs to be stretched or shrunk to fit a model. Other times, texture coordinates may fall outside the 0 to 1 range.

You can tell the GPU what to do in these instances by setting the Filtering and Wrapping Modes. The Filtering Mode lets you decide what to do when pixels don't map one-to-one onto texels. The Wrapping Mode lets you choose what to do with texture coordinates that fall out of range.

Texture Filtering

As I mentioned earlier, texels in a texture map rarely correspond one-to-one with pixels on the screen. For example, when you stretch or shrink a texture as you apply it to the model, any initial correspondence between a texel and a pixel breaks down. Because of this, the color of a pixel has to be approximated from the nearby texels. This process is called Texture Filtering.

Note: Stretching a texture is called Magnification. Shrinking a texture is known as Minification.
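Whether the GPU applies the magnification or the minification filter depends on how many texels land on each screen pixel. The one-dimensional C sketch below shows the idea. Real GPUs estimate this ratio per pixel from screen-space derivatives; the enum, the function name, and the tie rule (exactly one texel per pixel counts as magnification) are simplifying assumptions.

```c
/* Which filter case applies at a given scale (hypothetical enum). */
typedef enum {
    FILTER_CASE_MAGNIFICATION,
    FILTER_CASE_MINIFICATION
} FilterCase;

/* 1D simplification: texture_texels texels are drawn across screen_pixels
   pixels along one axis.
   - Fewer than one texel per pixel: each texel is stretched over several
     pixels, so the magnification filter (magFilter) applies.
   - More than one texel per pixel: several texels shrink into one pixel,
     so the minification filter (minFilter) applies.
   Exactly one texel per pixel is treated as magnification (an assumption). */
FilterCase classify_filter_case(float texture_texels, float screen_pixels) {
    float texels_per_pixel = texture_texels / screen_pixels;
    return texels_per_pixel > 1.0f ? FILTER_CASE_MINIFICATION
                                   : FILTER_CASE_MAGNIFICATION;
}
```

For example, a 256-texel-wide texture drawn across 512 pixels is magnified, while a 1024-texel-wide texture drawn across 256 pixels is minified.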

The two most common filtering settings are:

- Nearest Neighbor Filtering

- Linear Filtering

Nearest Neighbor Filtering

Nearest Neighbor Filtering is the fastest and simplest filtering method. The UV coordinate is mapped onto the texture, and the color of whichever texel it falls in is used as the pixel color.
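To make the idea concrete, here is a CPU-side sketch in C of nearest neighbor sampling. It is not how Metal implements filtering internally. It assumes a single-channel texture stored row by row and UV coordinates already inside [0, 1] (that is, wrapping has already been applied), and "sample_nearest" is a hypothetical name.

```c
/* Nearest neighbor sampling of a single-channel, row-major texture.
   Assumes u and v are already in [0, 1]. */
float sample_nearest(const float *texels, int width, int height,
                     float u, float v) {
    /* Scale the UV into texel space. For non-negative values the int cast
       truncates, which equals floor: it selects the texel the point falls in. */
    int x = (int)(u * (float)width);
    int y = (int)(v * (float)height);
    /* u == 1.0 (or v == 1.0) lands one past the last texel; keep it in range. */
    if (x > width - 1) x = width - 1;
    if (y > height - 1) y = height - 1;
    return texels[y * width + x];
}
```

On a 2x2 texture holding 10, 20 (top row) and 30, 40 (bottom row), the UV (0.75, 0.25) falls inside the top-right texel, so the sample is exactly 20 with no blending.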

Linear Filtering

Linear Filtering requires more work than Nearest Neighbor Filtering. It computes a weighted average of the texels surrounding the UV coordinate, giving closer texels larger weights. In other words, it linearly interpolates between the surrounding texels (in 2D, the 2x2 block of texels around the sample point).
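A CPU-side C sketch of 2D linear (bilinear) filtering follows. The helper names are hypothetical. The sketch assumes a single-channel, row-major texture and the common convention that texel centers sit at (i + 0.5) / width. Neighbors that fall off the border are clamped to the edge texel, which matches Clamp-to-Edge behavior.

```c
/* Floor for floats without needing libm. */
static int floor_to_int(float x) {
    int i = (int)x;                      /* truncates toward zero */
    return (x < (float)i) ? i - 1 : i;
}

/* Clamp a texel index into [0, n - 1] so border neighbors stay valid. */
static int clamp_index(int i, int n) {
    return i < 0 ? 0 : (i > n - 1 ? n - 1 : i);
}

/* Bilinear filtering: blend the 2x2 block of texels around (u, v). */
float sample_linear(const float *texels, int width, int height,
                    float u, float v) {
    /* Shift by half a texel so integer positions land on texel centers. */
    float x = u * (float)width - 0.5f;
    float y = v * (float)height - 0.5f;
    int x0 = floor_to_int(x);
    int y0 = floor_to_int(y);
    float fx = x - (float)x0;            /* weight of the right column */
    float fy = y - (float)y0;            /* weight of the bottom row */

    int xa = clamp_index(x0, width),  xb = clamp_index(x0 + 1, width);
    int ya = clamp_index(y0, height), yb = clamp_index(y0 + 1, height);

    /* Interpolate horizontally along both rows, then vertically. */
    float top    = texels[ya * width + xa] * (1 - fx) + texels[ya * width + xb] * fx;
    float bottom = texels[yb * width + xa] * (1 - fx) + texels[yb * width + xb] * fx;
    return top * (1 - fy) + bottom * fy;
}
```

With the same 2x2 texture (10, 20 / 30, 40), sampling the exact center (0.5, 0.5) averages all four texels, whereas Nearest Neighbor Filtering would return a single texel's value.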

Texture Wrapping

Most texture coordinates fall between 0 and 1, but there are instances when coordinates may fall outside this range. If this occurs, the GPU will handle them according to the Texture Wrapping mode specified.

You can set the wrapping mode for each (s, t) component to modes such as Repeat, Mirror-Repeat, or Clamp-to-Edge.

- If the mode is set to Repeat, it will force the texture to repeat in the direction in which the UV-coordinates exceeded 1.0.

- If it is set to Mirror-Repeat, the texture repeats as in Repeat mode, but every other repetition is mirrored, flipping the texture.

- If it is set to Clamp-to-Edge, it will force the texture to be sampled along the last row or column with valid texels.
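Each wrapping mode can be expressed as a function that maps an out-of-range coordinate back into [0, 1]. The C sketch below is a simplified CPU model that handles one component (s or t) at a time. The enum and function names are hypothetical, and real hardware applies these rules in texel space together with filtering.

```c
/* Hypothetical wrapping modes, mirroring the three described above. */
typedef enum {
    WRAP_REPEAT,
    WRAP_MIRROR_REPEAT,
    WRAP_CLAMP_TO_EDGE
} WrapMode;

/* Floor for floats without needing libm. */
static float floor_f(float x) {
    float t = (float)(long)x;            /* truncates toward zero */
    return (x < t) ? t - 1.0f : t;
}

/* Map one texture coordinate into [0, 1] according to the wrapping mode. */
float wrap_coord(float c, WrapMode mode) {
    switch (mode) {
    case WRAP_REPEAT:
        /* Keep only the fractional part: 1.25 -> 0.25, -0.25 -> 0.75. */
        return c - floor_f(c);
    case WRAP_MIRROR_REPEAT: {
        /* Reduce to one mirrored period [0, 2), then fold the second half
           back: 1.25 -> 0.75, 2.25 -> 0.25. */
        float p = c - 2.0f * floor_f(c * 0.5f);
        return p <= 1.0f ? p : 2.0f - p;
    }
    case WRAP_CLAMP_TO_EDGE:
    default:
        /* Reuse the border: 1.25 -> 1.0, -0.5 -> 0.0. */
        return c < 0.0f ? 0.0f : (c > 1.0f ? 1.0f : c);
    }
}
```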

Metal uses different terminology for Texture Wrapping: it calls it "Texture Addressing."

Applying Textures using Metal

To apply a texture using Metal, you need to create two objects: a Texture object and a Sampler State object.

The Texture object contains the image data, format, width, and height. The Sampler State object defines the filtering and addressing (wrapping) modes.

Texture Object

To apply a texture using Metal, you need to create an MTLTexture object. However, you do not create an MTLTexture object directly. Instead, you describe it with a Texture Descriptor object, MTLTextureDescriptor, and ask the Metal device to create the texture from that descriptor.

The Texture Descriptor defines properties such as the image width, height, and pixel format.

Once you have created an MTLTexture object through an MTLTextureDescriptor, you need to copy the image raw data into the MTLTexture object.

Sampler State Object

As mentioned earlier, the GPU needs to know what to do when a texture does not fit a 3D model properly. A Sampler State Object, MTLSamplerState, defines the filtering and addressing modes.

Just like a Texture object, you do not create a Sampler State object directly. Instead, you create it through a Sampler Descriptor object, MTLSamplerDescriptor.

Setting up the project

Let's apply a texture to a 3D model.

By now, you should know how to set up a Metal Application and how to render a 3D object. If you do not, please read the prerequisite articles mentioned above.

For your convenience, the project can be found here. Download the project so you can follow along.

Note: The project is found in the "addingTextures" git branch. The link should take you directly to that branch.

Let's start.

Declaring attribute, texture and Sampler objects

We will use an MTLBuffer to represent UV-coordinate attribute data as shown below:

// UV coordinate attribute

id<MTLBuffer> uvAttribute;

To represent a texture object and a sampler state object, we are going to use MTLTexture and MTLSamplerState, respectively. This is shown below:

// Texture object

id<MTLTexture> texture;

//Sampler State object

id<MTLSamplerState> samplerState;

Loading attribute data into an MTLBuffer

To load data into an MTLBuffer, Metal provides the method "newBufferWithBytes:length:options:". We are going to load the UV-coordinate data into the uvAttribute buffer. This is shown in the "viewDidLoad" method, line 6c.

//6c. Load UV-Coordinate attribute data into the buffer

uvAttribute=[mtlDevice newBufferWithBytes:smallHouseUV length:sizeof(smallHouseUV) options:MTLResourceCPUCacheModeDefaultCache];
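The "length:sizeof(smallHouseUV)" argument works because, presumably, "smallHouseUV" is declared as a C array: applying sizeof to an array yields its total size in bytes, whereas applying it to a pointer would only yield the size of the pointer. The C sketch below uses a hypothetical "quadUV" array (two floats per vertex) to show the arithmetic; it is not the project's actual data.

```c
#include <stddef.h>

/* Hypothetical UV data for one quad: two triangles, six vertices,
   two floats (u, v) per vertex. */
static const float quadUV[] = {
    0.0f, 0.0f,   1.0f, 0.0f,   1.0f, 1.0f,   /* first triangle  */
    0.0f, 0.0f,   1.0f, 1.0f,   0.0f, 1.0f    /* second triangle */
};

/* Total byte length of the array: the value passed as length: when
   creating the buffer. */
size_t quad_uv_length(void) {
    return sizeof(quadUV);
}

/* Number of vertices described by the array (2 floats per vertex). */
size_t quad_uv_vertex_count(void) {
    return sizeof(quadUV) / (2 * sizeof(float));
}
```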

Decoding Image data

The next step is to obtain the raw data of our image. The image we will use is named "small_house_01.png" and is shown below:

The image will be decoded by the LodePNG library in the "decodeImage" method. The library will provide a pointer to the raw data and will compute the width and height of the image. This information will be stored in the variables: "rawImageData", "imageWidth" and "imageHeight."

Creating a Texture Object

Our next step is to create a Texture Object through a Texture Descriptor Object as shown below:

//1. create the texture descriptor

MTLTextureDescriptor *textureDescriptor=[MTLTextureDescriptor texture2DDescriptorWithPixelFormat:MTLPixelFormatRGBA8Unorm width:imageWidth height:imageHeight mipmapped:NO];

//2. create the texture object

texture=[mtlDevice newTextureWithDescriptor:textureDescriptor];

The descriptor sets the pixel format, width, and height for the texture.

After creating the texture object, we need to copy the image color data into it. We do this by creating a 2D region with the dimensions of the image and then calling the texture's "replaceRegion:mipmapLevel:withBytes:bytesPerRow:" method, as shown below:

//3. copy the raw image data into the texture object

MTLRegion region=MTLRegionMake2D(0, 0, imageWidth, imageHeight);

[texture replaceRegion:region mipmapLevel:0 withBytes:&rawImageData[0] bytesPerRow:4*imageWidth];

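The "replaceRegion" method receives a pointer to the raw image data and the number of bytes in each row of the image. Since the MTLPixelFormatRGBA8Unorm format uses 4 bytes per pixel, each row occupies 4*imageWidth bytes.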
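The byte arithmetic behind "bytesPerRow:4*imageWidth" can be sketched in C as follows. The helper names are hypothetical, and the sketch assumes the decoded buffer is tightly packed RGBA8 (4 bytes per pixel, no padding between rows), which is the layout LodePNG produces when decoding to 32-bit RGBA.

```c
#include <stddef.h>
#include <stdint.h>

/* RGBA8: one byte each for R, G, B and A. */
enum { BYTES_PER_PIXEL_RGBA8 = 4 };

/* Bytes in one row of a tightly packed RGBA8 image (4 * imageWidth). */
size_t bytes_per_row(size_t width) {
    return BYTES_PER_PIXEL_RGBA8 * width;
}

/* Total size of the decoded image buffer. */
size_t image_byte_count(size_t width, size_t height) {
    return bytes_per_row(width) * height;
}

/* Byte offset of pixel (x, y) in a row-major RGBA8 buffer. */
size_t pixel_offset(size_t x, size_t y, size_t width) {
    return y * bytes_per_row(width) + x * BYTES_PER_PIXEL_RGBA8;
}

/* Read the alpha channel (the 4th byte) of pixel (x, y). */
uint8_t pixel_alpha(const uint8_t *rgba, size_t x, size_t y, size_t width) {
    return rgba[pixel_offset(x, y, width) + 3];
}
```

For example, a 256 x 128 image needs 1024 bytes per row and 131072 bytes in total.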

Creating a Sampler Object

Next, we create a Sampler State object through a Sampler Descriptor object. The Sampler Descriptor's filtering parameters are set to Linear Filtering, and its addressing parameters are set to "Clamp To Edge." See the snippet below:

//1. create a Sampler Descriptor

MTLSamplerDescriptor *samplerDescriptor=[[MTLSamplerDescriptor alloc] init];

//2a. Set the minification and magnification filters

samplerDescriptor.minFilter=MTLSamplerMinMagFilterLinear;

samplerDescriptor.magFilter=MTLSamplerMinMagFilterLinear;

//2b. set the addressing mode for the S component

samplerDescriptor.sAddressMode=MTLSamplerAddressModeClampToEdge;

//2c. set the addressing mode for the T component

samplerDescriptor.tAddressMode=MTLSamplerAddressModeClampToEdge;

//3. Create the Sampler State object

samplerState=[mtlDevice newSamplerStateWithDescriptor:samplerDescriptor];

Linking Resources to Shaders

In the "renderPass" method, we are going to bind the UV-Coordinates, texture object and sampler state object to the shaders.

Lines 10i and 10j show the methods used to bind the texture and sampler objects to the fragment shader.

//10i. Set the fragment texture

[renderEncoder setFragmentTexture:texture atIndex:0];

//10j. set the fragment sampler

[renderEncoder setFragmentSamplerState:samplerState atIndex:0];

The fragment shader, shown below, receives the texture and the sampler through parameters declared with the [[texture(n)]] and [[sampler(n)]] attributes, where n is the binding index. Since we bound the texture to index 0, its parameter is declared with [[texture(0)]]. The same logic applies to the sampler.

fragment float4 fragmentShader(VertexOutput vertexOut [[stage_in]], texture2d<float> texture [[texture(0)]], sampler sam [[sampler(0)]]){}

Setting up the Shaders

Open up the file "MyShader.metal."

Recall that the Fragment Shader is responsible for attaching a texture to a 3D object. However, to do so, the fragment shader requires a texture, a sampler object, and the UV-Coordinates.

The UV-Coordinates are passed down to the fragment shader from the vertex shader as shown in line 7b.

//7b. Pass the uv coordinates to the fragment shader

vertexOut.uvcoords=uv[vid];

The fragment shader receives the texture at texture(0) and the sampler at sampler(0). Textures provide a "sample" function that returns a color. The returned color depends on the UV coordinates and the Sampler parameters provided. This is shown below:

//sample the texture color

float4 sampledColor=texture.sample(sam, vertexOut.uvcoords);

Finally, we set the fragment color to the sampled color.

//set color fragment to the sampled color

return float4(sampledColor);

You can now build and run the project. You should see a texture attached to the 3D model.

Optionally, you can blend the shading color returned by the Vertex Shader with the sampled texture color. (Shading is already implemented in this project.)

//set the fragment color to a mix of the sampled color (80%) and the shading color (20%)

return float4(mix(sampledColor,vertexOut.color,0.2));
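The Metal Shading Language defines mix(x, y, a) as x + (y - x) * a, so with a = 0.2 the result is 80% sampled texture color and 20% shading color. A C sketch of the same per-component blend follows; "Color", "mix1" and "mix_color" are hypothetical names used only for illustration.

```c
/* RGBA color, standing in for float4 in the shader. */
typedef struct {
    float r, g, b, a;
} Color;

/* Same formula as MSL's mix(x, y, t): x + (y - x) * t,
   equivalently x * (1 - t) + y * t. */
static float mix1(float x, float y, float t) {
    return x + (y - x) * t;
}

/* Apply the blend to each channel independently. */
Color mix_color(Color x, Color y, float t) {
    Color out = {
        mix1(x.r, y.r, t), mix1(x.g, y.g, t),
        mix1(x.b, y.b, t), mix1(x.a, y.a, t)
    };
    return out;
}
```

Raising the last argument toward 1.0 lets the shading color dominate; lowering it toward 0.0 leaves the pure texture color.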

And that is it. Build the project; you should see the 3D model with a texture. Swipe your fingers across the screen to see the shading effect.

Note: At the time of writing, the iOS simulator does not support Metal. You need to connect your iPad or iPhone to run the project.

Hope this helps.