One of the hardest features to implement in a game engine is the animation of 3D characters. Unlike animation in 2D games, which consists of sprites played in sequence over a period of time, animation in 3D games consists of an armature influencing the vertices of a 3D model.

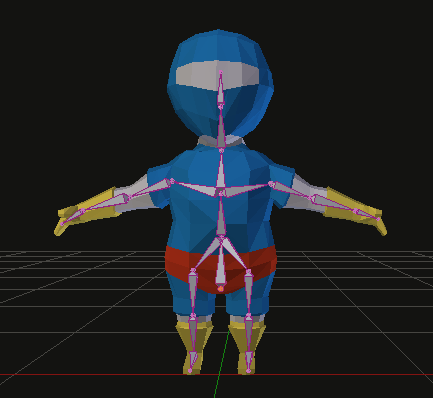

3D mesh and a bone armature

A 3D model needs an armature before it can be animated. An armature is a set of 3D bones. Connecting the armature to the 3D model produces a parent-child linkage. In this instance, the armature is the parent, and the 3D model is the child.

Armature linked to the 3D mesh

Bones influence the space coordinates of nearby vertices. For example, rotating the forearm bone transforms the space coordinates of all forearm vertices in the 3D mesh. The amount of influence a bone has on a vertex is known as a vertex weight.
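To make the idea of a vertex weight concrete, here is a minimal sketch in Python. It is a simplification, not how any particular engine does it: a single bone rotates about the z axis around a pivot, and the weight blends each vertex between its rest position and its fully rotated position. The names `rotate_about_z` and `apply_bone` are hypothetical helpers made up for this illustration.

```python
import math

def rotate_about_z(point, pivot, angle_rad):
    """Rotate a 3D point around a pivot, about the z axis."""
    x = point[0] - pivot[0]
    y = point[1] - pivot[1]
    z = point[2] - pivot[2]
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (pivot[0] + c * x - s * y,
            pivot[1] + s * x + c * y,
            pivot[2] + z)

def apply_bone(vertex, weight, pivot, angle_rad):
    """Blend between the rest position (weight 0) and the fully
    rotated position (weight 1). Assumption: one bone only."""
    rotated = rotate_about_z(vertex, pivot, angle_rad)
    return tuple((1.0 - weight) * v + weight * r
                 for v, r in zip(vertex, rotated))

elbow = (0.0, 0.0, 0.0)        # pivot of the hypothetical forearm bone
vertex = (1.0, 0.0, 0.0)       # a vertex on the forearm
quarter_turn = math.pi / 2.0

full = apply_bone(vertex, 1.0, elbow, quarter_turn)  # follows the bone completely
half = apply_bone(vertex, 0.5, elbow, quarter_turn)  # follows it halfway
none = apply_bone(vertex, 0.0, elbow, quarter_turn)  # ignores the bone
```

A vertex with weight 1.0 ends up near (0, 1, 0), one with weight 0.5 lands near (0.5, 0.5, 0), and one with weight 0.0 stays where it was.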

Rotating a bone affects nearby vertices

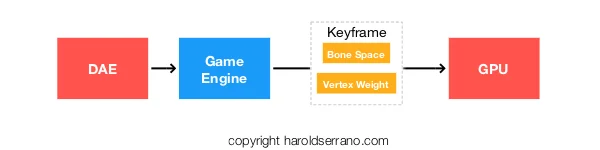

3D animations are composed of several keyframes. A keyframe stores the rotation and translation of every bone. As keyframes are played, the influence of bones on nearby vertices deforms the 3D model, creating the illusion of motion.
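One way to picture this data is as a few small records. The sketch below is only an illustration in Python; the class names (`BonePose`, `Keyframe`, `Animation`), the use of a quaternion for rotation, and the `time` field are assumptions, not the layout of any specific engine or file format.

```python
from dataclasses import dataclass, field

@dataclass
class BonePose:
    rotation: tuple      # assumed: unit quaternion (w, x, y, z)
    translation: tuple   # (x, y, z)

@dataclass
class Keyframe:
    time: float                                # seconds from the start (assumed)
    poses: dict = field(default_factory=dict)  # bone name -> BonePose

@dataclass
class Animation:
    name: str
    keyframes: list = field(default_factory=list)

    def duration(self):
        """Time of the last keyframe, or 0.0 for an empty animation."""
        return self.keyframes[-1].time if self.keyframes else 0.0

identity = (1.0, 0.0, 0.0, 0.0)                # no rotation
quarter_turn_z = (0.7071, 0.0, 0.0, 0.7071)    # roughly 90 degrees about z

wave = Animation("wave", [
    Keyframe(0.0, {"forearm": BonePose(identity, (0.0, 0.0, 0.0))}),
    Keyframe(0.5, {"forearm": BonePose(quarter_turn_z, (0.0, 0.0, 0.0))}),
])
```

Each keyframe holds one pose per bone, so playing the animation amounts to stepping through `wave.keyframes` in order of `time`.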

Animation with keyframes

The data stored in keyframes and vertex weights are exported to the game engine using a Digital Asset Exporter (DAE). When a game engine runs an animation, it sends keyframe data to the GPU.

As the GPU receives the keyframe data, each bone's vertex weights and bone space deform the 3D model, recreating the animation.
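The deformation step is commonly done with a technique called linear blend skinning: each vertex is transformed by every bone that influences it, and the results are averaged using the vertex weights. The sketch below runs on the CPU in plain Python purely for illustration; on a GPU this would typically live in a vertex shader. It assumes each bone matrix already maps a vertex from its rest pose to the current pose, and the function names are hypothetical.

```python
import math

def rotation_z(angle_rad):
    """4x4 row-major matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c,  -s,  0.0, 0.0],
            [s,   c,  0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def transform(matrix, point):
    """Apply a 4x4 matrix to a 3D point (w is taken as 1)."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(matrix[r][c] * v[c] for c in range(4))
                 for r in range(3))

def skin_vertex(position, influences, bone_matrices):
    """Linear blend skinning for one vertex.
    influences: list of (bone_index, weight); weights should sum to 1."""
    out = [0.0, 0.0, 0.0]
    for bone_index, weight in influences:
        moved = transform(bone_matrices[bone_index], position)
        for i in range(3):
            out[i] += weight * moved[i]
    return tuple(out)

# Bone 0 (upper arm) stays put; bone 1 (forearm) rotates 90 degrees.
bone_matrices = [rotation_z(0.0), rotation_z(math.pi / 2.0)]

vertex = (1.0, 0.0, 0.0)
# A vertex near the elbow: mostly forearm, partly upper arm.
skinned = skin_vertex(vertex, [(0, 0.25), (1, 0.75)], bone_matrices)
```

Here the vertex moves to roughly (0.25, 0.75, 0), three quarters of the way toward where the forearm bone alone would carry it.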

Game engine running an animation

However, rendering only the keyframes received from the DAE may produce choppy animations. The game engine smooths out the animation by interpolating the data between keyframes. That is, it interpolates each bone's coordinate space between consecutive keyframes.

Thus, even though a game artist may have produced only four keyframes, the game engine generates additional in-between frames. So, instead of sending only four keyframes to the GPU, the game engine might send, for example, sixteen.
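Here is a rough sketch of how in-between frames could be generated. It assumes rotations are stored as unit quaternions (w, x, y, z) and interpolated with slerp, while translations are interpolated linearly; those are common choices but an assumption here. The choice of four extra frames per gap is also an assumption, picked only because it turns four keyframes into sixteen frames. Many engines instead sample the animation at the current playback time each frame rather than building a list up front.

```python
import math

def lerp(a, b, t):
    """Linear interpolation between two tuples."""
    return tuple((1.0 - t) * x + t * y for x, y in zip(a, b))

def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:                 # flip one end to take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:              # nearly identical: normalized lerp
        q = lerp(q0, q1, t)
        n = math.sqrt(sum(c * c for c in q))
        return tuple(c / n for c in q)
    theta = math.acos(dot)
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))

def sample(key_a, key_b, t):
    """Interpolate one bone pose; a pose is (rotation, translation)."""
    return (slerp(key_a[0], key_b[0], t), lerp(key_a[1], key_b[1], t))

def expand(keyframes, extra_per_gap):
    """Insert extra_per_gap interpolated frames between each pair."""
    frames = []
    for a, b in zip(keyframes, keyframes[1:]):
        frames.append(a)
        for i in range(1, extra_per_gap + 1):
            frames.append(sample(a, b, i / (extra_per_gap + 1)))
    frames.append(keyframes[-1])
    return frames

def z_rotation(degrees):
    """Unit quaternion for a rotation about the z axis."""
    half = math.radians(degrees) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))

# Four artist-made keyframes for a single bone (hypothetical data).
keyframes = [
    (z_rotation(0.0),   (0.0, 0.0, 0.0)),
    (z_rotation(90.0),  (2.0, 0.0, 0.0)),
    (z_rotation(180.0), (2.0, 2.0, 0.0)),
    (z_rotation(270.0), (0.0, 2.0, 0.0)),
]

frames = expand(keyframes, extra_per_gap=4)   # 4 keyframes + 3 gaps * 4 = 16
midpoint = sample(keyframes[0], keyframes[1], 0.5)
```

The `midpoint` pose is a 45 degree rotation about z with the bone translated to (1, 0, 0), halfway between the first two keyframes.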

Hope this helps